Dynamic Routing in LangGraph

By Xavier Collantes

11/9/2025

- LangGraph is a framework for writing and managing "nodes", each of which performs an individual task, and for connecting those nodes into a graph.

- LangGraph chooses the next node based on conditions evaluated at the current node (like IF-ELSE).

- LangGraph also manages the "State", a data store accessible to all nodes.

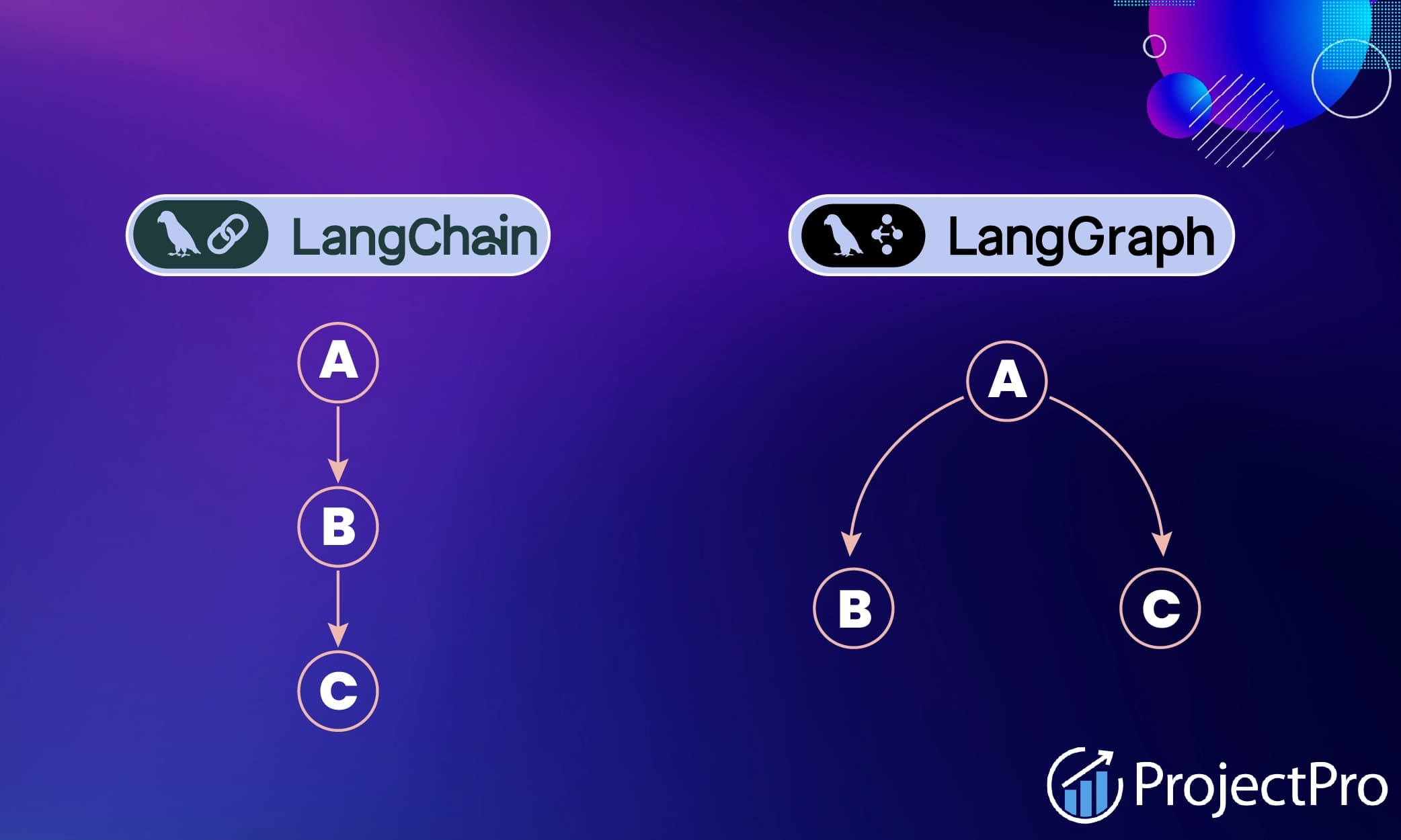
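The three ideas above (nodes, conditional routing, shared state) can be illustrated without LangGraph at all. The following is a minimal plain-Python sketch, not LangGraph's API: `run_graph`, the node functions, and the dict-based state are all made up for illustration.

```python
from typing import Callable, Optional

# Hypothetical stand-in for a graph framework: each node receives the shared
# state and returns the updates it wants to make plus the name of the next
# node (or None to stop).
State = dict
Node = Callable[[State], tuple[dict, Optional[str]]]


def classify(state: State) -> tuple[dict, Optional[str]]:
    # Conditional routing: the next node depends on runtime data.
    is_bug = "error" in state["message"].lower()
    return {"kind": "bug" if is_bug else "question"}, "bug" if is_bug else "faq"


def bug(state: State) -> tuple[dict, Optional[str]]:
    return {"reply": "We are looking into it."}, None


def faq(state: State) -> tuple[dict, Optional[str]]:
    return {"reply": "Please see the FAQ."}, None


def run_graph(nodes: dict[str, Node], start: str, state: State) -> State:
    """Run nodes one at a time, merging each node's updates into the state."""
    current: Optional[str] = start
    while current is not None:
        updates, current = nodes[current](state)
        state = {**state, **updates}
    return state


NODES = {"classify": classify, "bug": bug, "faq": faq}
final = run_graph(NODES, "classify", {"message": "I get an error on login"})
print(final["kind"], "->", final["reply"])
```

LangGraph plays the role of `run_graph` here, with persistence and other features layered on top.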

LangChain (1-Dimension) vs LangGraph (2-Dimension)

LangChain:

- Linear execution flow (A -> B -> C)

- Great for simple pipelines

LangChain can technically support many core features of LangGraph; for example, you can use `MultiRouteChain` for dynamic routing. But LangGraph is the next iteration, or spinoff, built around those features.

LangGraph:

- Conditional branching

- Built-in state persistence

- Dynamic routing based on runtime data

Use Case: IT Support Ticket Handling

The State Model

Defining the `description` of each field in your Pydantic models is good practice, both for your fellow developers and for the LLM: many LLM integrations accept a Pydantic model as the schema when you require structured output, and the descriptions help the model understand the data.

```python
import uuid
from enum import Enum
from pydantic import BaseModel, Field


class Intent(Enum):
    """Classification of user intent."""
    PASSWORD = "PASSWORD"
    FEATURE_REQ = "FEATURE_REQ"
    BUG = "BUG"
    BILLING = "BILLING"
    # Good practice to have a catch-all for unknown cases.
    OTHER = "OTHER"


class ContentClassify(BaseModel):
    """Structured output for email classification."""
    intent: Intent = Field(
        description="The classified intent of the email; the choices are given in the Intent enum."
    )
    requestor_email: str = Field(
        description="Email address of the sender, i.e. the user requesting support."
    )
    requestor_name: str = Field(
        description="Name of the sender, i.e. the user requesting support."
    )


# Personally I tend to have most fields default to None since the initial state
# of the graph is empty.
class TicketState(BaseModel):
    """State persists throughout the graph execution."""
    ticket_id: str = Field(
        default_factory=lambda: str(uuid.uuid4()),
        description="Unique identifier for the ticket.",
    )
    email_body: str | None = Field(
        default=None,
        description="The body of the email from the user.",
    )
    intent: Intent | None = Field(
        default=None,
        description="The intent of the user requesting support.",
    )
    requestor_email: str | None = Field(
        default=None,
        description="The email address of the user requesting support.",
    )
    requestor_name: str | None = Field(
        default=None,
        description="The name of the user requesting support.",
    )
    # Filled in by the search_docs node.
    search_results: list | None = Field(
        default=None,
        description="Documentation search results related to the user's issue.",
    )
    email_draft: str | None = Field(
        default=None,
        description="The draft email to be sent to the user.",
    )
```
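To see why the field descriptions matter to the LLM, it helps to look at roughly what travels with a structured-output request: a schema listing each field, its description, and its allowed values. Pydantic v2 produces the real schema with `model_json_schema()`. The sketch below only imitates the idea with stdlib dataclasses; `Classification` and `describe_fields` are hypothetical names, and the `Intent` enum is a trimmed copy of the one above.

```python
import json
from dataclasses import dataclass, field, fields
from enum import Enum


class Intent(Enum):
    PASSWORD = "PASSWORD"
    BUG = "BUG"
    OTHER = "OTHER"


@dataclass
class Classification:
    # The description lives next to the field, as with pydantic's Field(...).
    intent: Intent = field(
        default=Intent.OTHER,
        metadata={"description": "The classified intent of the email."},
    )
    requestor_email: str = field(
        default="",
        metadata={"description": "Email address of the user requesting support."},
    )


def describe_fields(cls) -> dict:
    """Build a simplified, schema-like dict from dataclass field metadata."""
    properties = {}
    for f in fields(cls):
        entry = {"description": f.metadata.get("description", "")}
        if isinstance(f.type, type) and issubclass(f.type, Enum):
            # Enum members become the list of allowed values.
            entry["enum"] = [member.value for member in f.type]
        properties[f.name] = entry
    return {"title": cls.__name__, "properties": properties}


schema = describe_fields(Classification)
print(json.dumps(schema, indent=2))
```

A field without a description would show up in such a schema with nothing but its name to guide the model.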

Building the Graph

```python
from langgraph.checkpoint.sqlite import SqliteSaver
from langgraph.graph import START, END, StateGraph

# Initialize graph with state model
graph: StateGraph = StateGraph(TicketState)

# Add nodes. The *_node functions are the node functions shown in the
# following sections; draft_email_node and send_email_node are assumed to
# exist and are not shown in this article.
graph.add_node("classify_content", classify_content_node)
graph.add_node("search_docs", search_docs_node)
graph.add_node("create_feature_request", create_feature_request_node)
graph.add_node("human_review", human_review_node)
graph.add_node("draft_email", draft_email_node)
graph.add_node("send_email", send_email_node)

# Entry point.
# START is required by LangGraph but END is optional.
graph.add_edge(START, "classify_content")

# You can define edges with graph.add_edge(...) or you can name the next node
# in the Command object returned by the node function.

# Compile with state persistence. Use compiled_graph inside this block: the
# SQLite connection is closed when the block exits.
with SqliteSaver.from_conn_string("checkpoints.db") as checkpointer:
    compiled_graph = graph.compile(checkpointer=checkpointer)
```

LangGraph offers several checkpointer options. For example, you can use the `MemorySaver` checkpointer to save the state in memory or the `RedisSaver` checkpointer to save the state in Redis.
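Conceptually, a checkpointer stores a snapshot of the state under a thread identifier so that a later call can pick up where an earlier one stopped. The sketch below shows that idea with the stdlib `sqlite3` module. `TinyCheckpointer` and its table layout are hypothetical and far simpler than LangGraph's real savers.

```python
import json
import sqlite3


class TinyCheckpointer:
    """Hypothetical, simplified checkpoint store keyed by thread_id."""

    def __init__(self, path: str = ":memory:") -> None:
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS checkpoints ("
            "id INTEGER PRIMARY KEY AUTOINCREMENT, "
            "thread_id TEXT NOT NULL, "
            "node TEXT NOT NULL, "
            "state TEXT NOT NULL)"
        )

    def save(self, thread_id: str, node: str, state: dict) -> None:
        # One row per step, so earlier snapshots stay available.
        self.conn.execute(
            "INSERT INTO checkpoints (thread_id, node, state) VALUES (?, ?, ?)",
            (thread_id, node, json.dumps(state)),
        )
        self.conn.commit()

    def latest(self, thread_id: str):
        """Return (node, state) of the newest snapshot, or None if absent."""
        row = self.conn.execute(
            "SELECT node, state FROM checkpoints "
            "WHERE thread_id = ? ORDER BY id DESC LIMIT 1",
            (thread_id,),
        ).fetchone()
        return None if row is None else (row[0], json.loads(row[1]))


saver = TinyCheckpointer()
saver.save("customer_123", "classify_content", {"intent": "BUG"})
saver.save("customer_123", "search_docs", {"intent": "BUG", "hits": 2})
print(saver.latest("customer_123"))
```

Swapping the storage backend (memory, SQLite, Redis) changes where the snapshots live, not how the graph is written.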

Dynamic Routing With Conditionals

The `classify_content` node uses LLM classification to determine the next node dynamically:

```python
from langchain_core.runnables import Runnable
from langgraph.types import Command


def classify_content_node(state: TicketState) -> Command:
    """Classify email and route to appropriate handler."""

    # Use structured LLM output for classification.
    # `llm` is assumed to be a chat model instance created elsewhere.
    structured_llm: Runnable = llm.with_structured_output(ContentClassify)

    prompt = f"""
    Classify the support email and identify the requestor.

    Email contents:
    {state.email_body}
    """

    classify_result = structured_llm.invoke(prompt)

    # Dynamic routing based on intent
    if classify_result.intent == Intent.PASSWORD:
        next_node = "draft_email"  # Simple password reset
    elif classify_result.intent == Intent.FEATURE_REQ:
        next_node = "create_feature_request"  # Track in backlog
    elif classify_result.intent == Intent.BUG:
        next_node = "search_docs"  # Check for known issues
    else:
        next_node = "human_review"  # Complex cases need humans

    return Command(
        update={
            "intent": classify_result.intent,
            "requestor_email": classify_result.requestor_email,
            "requestor_name": classify_result.requestor_name,
        },
        goto=next_node,
    )
```

Process Flow

- Classification Node: Analyzes the email with an LLM to extract intent

- Conditional Logic: Uses `if-else` to determine the next node

- Command Object: Returns `Command(update={...}, goto=next_node)` for dynamic routing

- State Updates: Passes extracted data to downstream nodes via state
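The conditional step in this flow can also be written as a lookup table, which keeps the routing in one place as the list of intents grows. This is a design alternative rather than what the node above does. `ROUTES` and `pick_next_node` are hypothetical names, while the node names and `Intent` members mirror the ones used in this article.

```python
from enum import Enum


class Intent(Enum):
    PASSWORD = "PASSWORD"
    FEATURE_REQ = "FEATURE_REQ"
    BUG = "BUG"
    BILLING = "BILLING"
    OTHER = "OTHER"


# Intents with a dedicated handler; everything else falls back to a human.
ROUTES: dict[Intent, str] = {
    Intent.PASSWORD: "draft_email",
    Intent.FEATURE_REQ: "create_feature_request",
    Intent.BUG: "search_docs",
}


def pick_next_node(intent: Intent) -> str:
    """Return the name of the node that should handle this intent."""
    return ROUTES.get(intent, "human_review")


for intent in Intent:
    print(f"{intent.value:12} -> {pick_next_node(intent)}")
```

Inside the classification node, `goto=pick_next_node(classify_result.intent)` would then take the place of the if-else chain.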

Downstream Handlers

```python
from langgraph.types import Command, interrupt


def search_docs_node(state: TicketState) -> Command:
    """Search documentation for bug solutions."""

    # A similarity search is used here, but any lookup would do.
    # `vector_store` is assumed to be a vector store created elsewhere.
    search_results = vector_store.similarity_search(state.email_body)

    return Command(
        # Update the state with the search results
        # (requires a `search_results` field on TicketState).
        update={"search_results": search_results},
        goto="draft_email",
    )


def create_feature_request_node(state: TicketState) -> Command:
    """Create feature request in project tracker."""
    # Create Jira ticket or similar
    return Command(goto="draft_email")


def human_review_node(state: TicketState) -> Command:
    """Pause for human intervention."""
    # Save state and wait for human input. interrupt() returns the value
    # supplied when the run is resumed; it is not used further here.
    human_verdict = interrupt(state.model_dump())
    return Command(goto="draft_email")
```

Each handler routes to `draft_email`, which uses the state to generate appropriate responses.

Execution

```python
# Create config for thread persistence
config = {"configurable": {"thread_id": "customer_123"}}

# Initial state
initial_state: TicketState = TicketState(
    email_body="I forgot my password and can't log in!"
)

# Execute graph
result = compiled_graph.invoke(initial_state, config)
```

This example routes straight to `draft_email` for password resets, but would take different paths for bugs or feature requests.

Xavier's Experience

Using `Command(goto=...)` lets a node pick the next node from runtime conditions. Be careful when the same node also has a `graph.add_edge(...)` definition: the LangGraph documentation describes `Command` as adding a dynamic edge while static edges still execute, so do not rely on `goto` to cancel an existing static edge.

The `ContentClassify` model is used to classify the email content by being passed to the LLM call as the structured output type. With many LLM integrations, including LangChain/LangGraph, you CANNOT specify a bare Enum as the output type for structured outputs. It MUST be wrapped in an object.

```python
class ContentClassify(BaseModel):
    """Structured output for email classification."""
    intent: Intent = Field(
        description="The classified intent of the email; the choices are given in the Intent enum."
    )
    requestor_email: str = Field(
        description="Email address of the sender, i.e. the user requesting support."
    )
    requestor_name: str = Field(
        description="Name of the sender, i.e. the user requesting support."
    )
```
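One way to picture the wrapper: a structured reply typically comes back as a JSON object with named fields, and the enum is the value of one of those fields. Below is a stdlib-only sketch of turning such a reply into typed data. `parse_reply`, `Classification`, and the sample JSON are hypothetical, and LangChain's own parsing and validation are more thorough.

```python
import json
from dataclasses import dataclass
from enum import Enum


class Intent(Enum):
    PASSWORD = "PASSWORD"
    BUG = "BUG"
    OTHER = "OTHER"


@dataclass
class Classification:
    intent: Intent
    requestor_email: str


def parse_reply(raw: str) -> Classification:
    """Parse a JSON object reply; unknown intents fall back to OTHER."""
    data = json.loads(raw)
    try:
        intent = Intent(data["intent"])
    except ValueError:
        # The model returned a label outside the enum.
        intent = Intent.OTHER
    return Classification(intent=intent, requestor_email=data["requestor_email"])


reply = '{"intent": "BUG", "requestor_email": "ana@example.com"}'
print(parse_reply(reply))
```

The fallback to `Intent.OTHER` is the same catch-all idea as the `OTHER` member in the enum defined earlier.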

The `SqliteSaver` checkpointer saves state between nodes, enabling human-in-the-loop workflows that can pause and resume.
